How Meta is trying to make VR indistinguishable from reality

Ian Campbell

Input

As Meta continues its march toward realizing the future of the mobile internet, or the “metaverse,” it needs both to get useful VR (and someday, AR) hardware into the hands of consumers and to give people a compelling reason to spend several hours a day with screens strapped to their faces.

In a presentation led by Mark Zuckerberg, Michael Abrash (chief scientist of Meta’s Reality Labs), and several members of the Display Systems Research team, Meta showed some of its in-development hardware, which makes the case for just how realistic future VR displays could look. While the fruits of those labors are impressive, they’re still a ways off, likely many years off, and might not be what’s needed to justify the metaverse in the present.

“[The display] is in a lot of ways the last link in the chain,” Zuckerberg said. “It’s the component that takes the final rendered graphical output and converts it into the photons that arrive [at your eyes] that you can see.”

The Visual Turing Test

Subjectivity — Meta believes the major selling point of virtual reality, augmented reality, and whatever mix you can experience in between them is a sense of presence that’s altogether lacking in most digital communication. There are a lot of different ways the company hopes to convey that “presence,” but the one it’s attempting to address with these prototypes is purely visual.

Can a VR display convincingly replace what you normally see in your day-to-day life? Can VR images be indistinguishable from reality? Meta refers to these basic questions as a “Visual Turing Test,” inspired by the original Turing Test, which Alan Turing devised to see whether humans could be fooled into thinking a computer program is a person. Importantly, both Turing’s original test and Meta’s VR spin on it rely on subjective human experience. It doesn’t matter whether an AI gives the right answer or a VR headset reaches a certain level of visual fidelity (Meta estimates that matching 20/20 vision across the full human field of view would take more than 8K resolution). Humans have to be convinced first and foremost.

“I think we're in the middle right now of a big step forward towards realism and in the creativity that that unlocks,” Zuckerberg said. “I don't think it's going to be that long until we can create scenes with basically perfect fidelity.”

No VR headset currently passes the test, but Meta thinks its new prototypes are getting it ever so slightly closer.

“The human visual system is very complex and it's deeply integrated. Just seeing a realistic-looking image isn't enough to make you feel like you're really there. To get that feeling of immersion, you need all of the other visual cues as well that go with that.”

Four criteria — Meta’s Display Systems Research team settled on four display criteria to try to pass its test: resolution, focus, distortion, and high dynamic range (HDR). Resolution is largely limited by screen technology, but focus, distortion, and HDR can all be meaningfully improved in future VR hardware.

“We estimate that getting to 20/20 vision across the full human field of view would take more than 8K resolution,” said Zuckerberg. “Because of some of the quirks of human vision, you don't actually need all those pixels all the time because our eyes don't actually perceive things in high resolution across the entire field of view.”
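To see roughly where a “more than 8K” figure can come from, here is a back-of-the-envelope sketch in Python. The constants are assumptions, not numbers from Meta’s presentation: 20/20 vision is commonly approximated as resolving one arcminute, or about 60 pixels per degree; the human field of view is taken as roughly 200 degrees wide by 135 degrees tall; and 8K means 7680 × 4320 pixels. The second function, `foveated_pixels`, is hypothetical and only illustrates Zuckerberg’s point about the quirks of human vision: it budgets full detail for a small central window and much less everywhere else.

```python
# Back-of-the-envelope pixel budget for "retinal" resolution.
# All constants are illustrative assumptions, not Meta's figures.

PIXELS_PER_DEGREE = 60      # ~1 arcminute per pixel, a common 20/20 rule of thumb
FOV_DEGREES = (200, 135)    # assumed horizontal x vertical human field of view
EIGHT_K = (7680, 4320)      # 8K UHD resolution


def pixels_needed(fov_deg, ppd=PIXELS_PER_DEGREE):
    """Return (width, height) in pixels needed to reach `ppd` across `fov_deg`."""
    return tuple(round(angle * ppd) for angle in fov_deg)


def foveated_pixels(fov_deg, fovea_deg=20, peripheral_ppd=15, ppd=PIXELS_PER_DEGREE):
    """Hypothetical two-zone rendering budget: full density inside a square
    `fovea_deg` x `fovea_deg` window, `peripheral_ppd` everywhere else."""
    width_deg, height_deg = fov_deg
    fovea_area = fovea_deg * fovea_deg                 # square degrees at full density
    periphery_area = width_deg * height_deg - fovea_area
    return round(fovea_area * ppd**2 + periphery_area * peripheral_ppd**2)


width, height = pixels_needed(FOV_DEGREES)
print(f"Needed: {width} x {height}")                   # Needed: 12000 x 8100
print(f"8K:     {EIGHT_K[0]} x {EIGHT_K[1]}")
print(f"Pixel-count ratio vs 8K: {width * height / (EIGHT_K[0] * EIGHT_K[1]):.1f}x")  # 2.9x
print(f"Foveated budget: {foveated_pixels(FOV_DEGREES):,} pixels")
```

Under these assumptions, uniform retinal resolution needs about 12,000 × 8,100 pixels, roughly 2.9 times the pixel count of an 8K panel, while the made-up foveated budget comes to well under a tenth of that. Note that foveation mainly reduces what has to be rendered at full detail; the panel itself still has to be sharp wherever the eye might look.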

“Today’s VR headsets have substantially lower color range, brightness, and contrast than laptops, TVs, and mobile phones,” said Abrash. “VR can't yet reach that level of fine detail and accurate representation that we’ve become accustomed to with our 2D displays.”

“Basically, the challenge that we set for ourselves is to find out what it would take to get to that retinal resolution in a headset,” Zuckerberg said.

As part of its research and development, Meta made a headset dubbed “Butterscotch” to achieve greater visual fidelity. It resembles a bulkier version of the Quest 2, but its extra display components make it “nowhere near shippable,” according to Abrash.

For focus, the main challenge is making VR behave more like our eyes already do. Our eyes constantly focus and refocus as we look around the world, but VR headsets have a fixed focus: the display stays at the same distance even when a virtual object moves closer to our faces. That can keep a VR experience from feeling as real as it could.

Meta’s “Half-Dome” headset prototypes attempt to address this issue with varifocal displays that can change focal lengths depending on where you’re looking in VR. Moving an object closer to your face in VR mechanically moves the screens closer. “It’s kind of like how autofocus works on cameras,” explained Zuckerberg.

Later prototypes have achieved the same effect electronically, and in the tests the research team ran, a majority of people preferred varifocal to fixed focus VR. “They experienced less fatigue and blurry vision,” Abrash said. “And they're able to identify small objects better and had an easier time reading text, and reacted to their visual environment more quickly.”
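The article doesn’t say how Meta’s prototypes decide where to focus, but one approach used in varifocal research is to have eye tracking measure vergence, the inward rotation of both eyes, which increases as you look at nearer objects. The Python sketch below converts a vergence angle into a fixation distance and then into the focal power (in diopters, or 1 / meters) a varifocal display would target. The 63 mm interpupillary distance, the 0 to 4 diopter range, and the function names are assumptions for illustration only.

```python
import math

IPD_M = 0.063  # assumed interpupillary distance in meters (a typical adult value)


def fixation_distance_m(vergence_deg, ipd_m=IPD_M):
    """Distance to the fixation point, assuming symmetric straight-ahead gaze.

    Each eye rotates inward by half the vergence angle, so
    distance = (ipd / 2) / tan(vergence / 2).
    """
    half_angle = math.radians(vergence_deg) / 2
    if half_angle <= 0:
        return math.inf  # parallel (or diverging) gaze: treat as optical infinity
    return (ipd_m / 2) / math.tan(half_angle)


def focus_diopters(vergence_deg, min_d=0.0, max_d=4.0):
    """Focal power to request from a hypothetical varifocal display,
    clamped to an assumed 0-4 diopter range (infinity down to 25 cm)."""
    distance = fixation_distance_m(vergence_deg)
    diopters = 0.0 if math.isinf(distance) else 1 / distance
    return min(max(diopters, min_d), max_d)


# Vergence angle for an object half a meter away, then the focus it implies.
vergence = 2 * math.degrees(math.atan((IPD_M / 2) / 0.5))
print(f"vergence {vergence:.2f} deg -> focus {focus_diopters(vergence):.2f} D")
```

This assumes the eyes fixate straight ahead; a real system would also have to cope with noisy tracking, off-center fixations, and the time it takes to move optics or switch focal states.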

Similar adaptations have to be made to account for distortion. Like our eyes, thick VR lenses are curved, and they bend the image passing through them. Traditionally, Meta uses software to pre-warp images so they’ll look right while you’re actually wearing a Quest 2, but the distortion changes depending on where your eyes are looking, which means that for images to appear stable and realistic, the headset also needs some form of eye tracking.
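Software correction of this kind is typically done by pre-distorting the rendered image with the inverse of the lens distortion. A common textbook model is radial: a point at normalized distance r from the lens center appears at r(1 + k1·r² + k2·r⁴). The Python sketch below inverts that model numerically and picks coefficients based on gaze angle, a crude stand-in for the eye-position dependence described above. The coefficient values, the nearest-gaze lookup table, and all function names are hypothetical; they are not Quest 2 calibration data or Meta’s method.

```python
# Hypothetical calibration: radial distortion coefficients (k1, k2) for a few
# horizontal gaze angles in degrees. A real headset would use measured data.
COEFFS_BY_GAZE = {
    -20: (0.26, 0.06),
    0: (0.22, 0.05),
    20: (0.26, 0.06),
}


def coeffs_for_gaze(gaze_deg):
    """Pick the coefficients calibrated for the nearest gaze angle."""
    nearest = min(COEFFS_BY_GAZE, key=lambda g: abs(g - gaze_deg))
    return COEFFS_BY_GAZE[nearest]


def lens_distort(r, k1, k2):
    """Radial lens model: where a point rendered at radius r appears to the eye."""
    return r * (1 + k1 * r**2 + k2 * r**4)


def predistort(r_target, k1, k2, iterations=20):
    """Radius to render at so that, after the lens distorts it, the point appears
    at r_target. Solves lens_distort(r) == r_target by fixed-point iteration,
    which converges for the mild coefficients used here."""
    r = r_target
    for _ in range(iterations):
        r = r_target / (1 + k1 * r**2 + k2 * r**4)
    return r


k1, k2 = coeffs_for_gaze(gaze_deg=3)
render_r = predistort(1.0, k1, k2)
print(f"render at r={render_r:.4f}, appears at r={lens_distort(render_r, k1, k2):.4f}")
```

Because the lens pushes points outward in this model, the software pulls them inward before display. Swapping coefficients as the gaze moves is only a simplification of the real problem, in which the distortion depends on where the pupil sits relative to the lens.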

“The problem with studying distortion is that it takes a really long time,” Abrash said. “Just fabricating the lenses needed to study the problem can take weeks or months. And that's only the beginning of the long process of actually building a functional display system.”

To speed up its research into distortion correction, the Display Systems Research team built a simulator that uses “virtual optics and eye-tracking to replicate the distortion that you would see in a headset... and then display it using 3D TV technology.” According to Abrash, this not only cut prototyping time from months to minutes but also spared the team from building expensive physical headsets, further reducing costs.

High dynamic range is all about brightness and color reproduction, but according to Meta, fitting an HDR-compatible display into something as small as the Quest 2 (or ideally smaller) presents challenges. In some of Meta’s experiments, like its “Starburst” headset, bright HDR-compatible displays meant the headset had to have giant handles to be manageable.

“The vividness of screens that we have now compared to what the eye is capable of seeing in the physical world is off by an order of magnitude or more,” Zuckerberg said. Meta believes that for VR to achieve realism comparable to reality, its headsets will need to go beyond 10,000 nits of peak brightness. Abrash said that in Meta’s research, 10,000 nits was the preferred brightness for TVs, even though few TVs currently get that bright; most max out just above 1,000 nits.

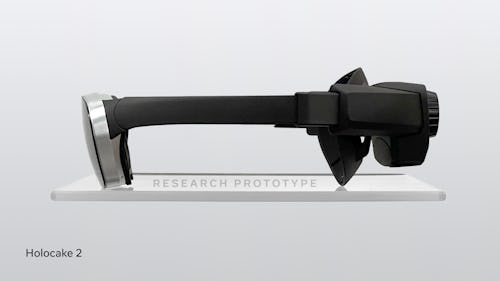

Holocake 2 and Mirror Lake

Holocake — These experiments have led to impressive progress. Meta bundled some of the results of its work on focus, distortion, and HDR into a new prototype it’s calling Holocake 2, “the thinnest and lightest VR headset [it’s] ever built.” The device has some surface-level similarities to the design of Project Cambria, the high-end mixed-reality headset Meta plans on releasing later this year, but features all-new internals.

To shrink the overall size, Holocake 2 trades a traditional curved lens for a “hologram of a lens,” to borrow Zuckerberg’s description. Holographic lenses are slimmer and flatter than normal lenses but still direct light in a consistent pattern. Combined with “polarization-based optical folding” (what’s sometimes called “pancake optics”), this keeps Holocake 2 slim while delivering the same performance as the Quest 2, though it has to be tethered to a PC.

The Future — Mirror Lake, a concept Meta is exploring that so far exists only as 3D renders, takes the idea further, combining the size improvements of Holocake 2 with all of the varifocal goodness and HDR performance of the Display Systems Research team’s other experiments. Mirror Lake looks a bit like ski goggles and incorporates varifocal displays, eye-tracking, and holographic pancake optics. It’s a real, though not yet physical, look at what Meta’s future mixed-reality hardware could be.

A reason to exist

It definitely seems like, with enough time and money, there’s a good chance Meta will build VR displays that convincingly reproduce the real world. But in the present, that’s not really the problem.

Skepticism lingers around VR and “the metaverse” not just because of Meta’s pedigree or the accessibility of VR hardware, but because there still hasn’t been a fully coherent case for what people will be doing in VR. Meta has continued to expand its flagship software suite of Horizon Workrooms, Horizon Venues, and Horizon Worlds. But there’s no real sense of how or when these pieces will fit together, or whether developers are building apps that will work with them in a way that makes sense.


Meta was clear that its new prototypes and the display research behind them are really just one part of a bigger effort to create realistic VR and AR experiences. I can’t criticize the company for not showing me something it never intended to show. And because Meta plans to have two VR lines, an expensive “prosumer” line with more experimental features and a consumer line sold at cost, expensive VR display tech could eventually become accessible to everyone.

But as impressive as these future displays are, I can’t help but think they’re being shown off at the wrong time. We need a reason to put on these fancy VR headsets right now, not just the promise that they’ll be even better in the future. To put it another way, the picture’s starting to come into focus, but it’s not clear if it’ll happen fast enough to justify all the work Meta’s doing.
